Tesla Tells Drivers to Be Paranoid Amid FSD9 Release

Tesla has finally released its Full Self-Driving (FSD) 9 beta. However, it came with quite the warning, and some are concerned about the ramifications of unproven software being used on the open road. The new beta from the Palo Alto-based EV manufacturer has been highly anticipated by many. Now, some worry about the reality of unsupervised members of the public being inattentive at the wheel of a moving vehicle.

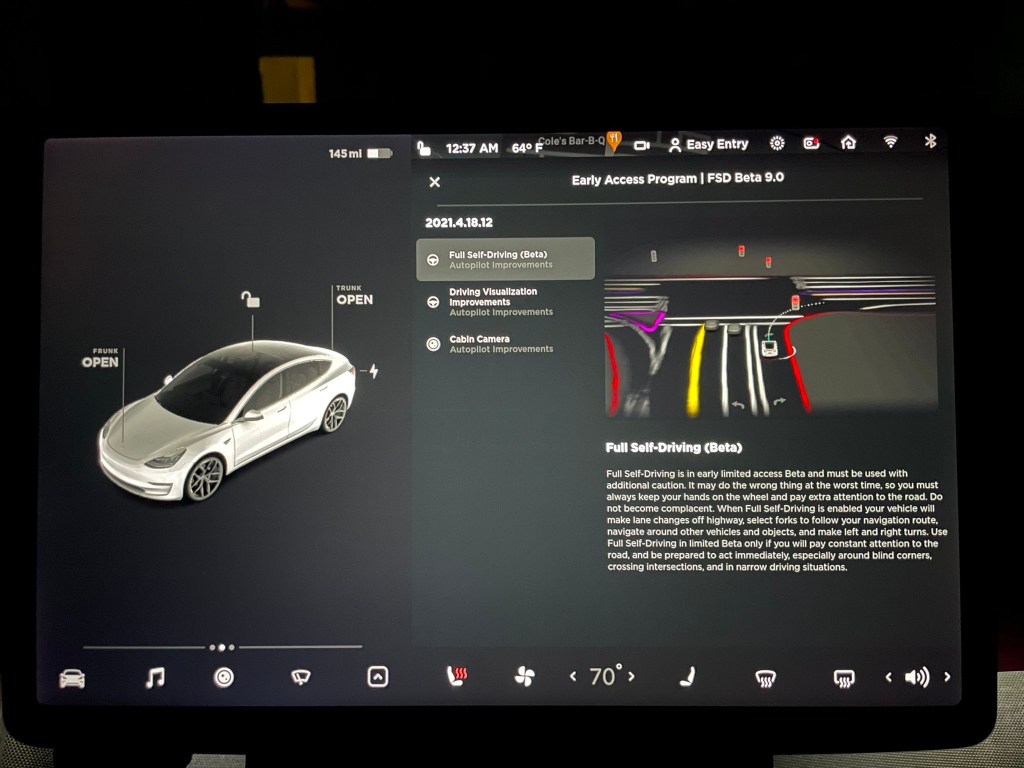

“It may do the wrong thing at the worst time”

Twitter user “tesla_raj” posted the image seen above to his account on July 10th. It shows a warning to participants in the company’s new Full Self-Driving 9 beta. In the warning, Tesla urges owners to be vigilant and only use FSD if they are “prepared to act immediately, especially around blind corners.” Those last four words are rather interesting given what happened to one owner a few weeks back. The Tesla owner’s vehicle plowed straight through a sharp turn with no warning.

Yes, scary stuff indeed. However, that’s not even the scariest part of the message. In it, there’s a section that states the vehicle “may do the wrong thing at the worst time.” It’s clear Tesla is trying its best to warn owners to be vigilant and attentive, if not a little paranoid, as CEO Elon Musk put it on Twitter. Clearly, the company is aware the software is very much in a beta phase and wants owners to be as well. Points for at least giving notice, Tesla.

Tesla software has already done “the wrong thing”

However, some of Tesla’s software is known for doing exactly the wrong thing at the worst time. There was that incident with the sharp bend, to name one. To name another, there have been numerous instances of Tesla Autopilot software causing property damage, and even taking lives. Now, part of this is on owners. Tesla has warned drivers of their responsibility to keep an eye on things, and drivers have often failed to do so.

That said, Tesla shares some blame here. Well, quite a lot of it actually. A simple warning is not enough. The manufacturer has continually escaped responsibility for the effectiveness of its software, despite promising and making efforts to improve it. Frankly, Tesla needs to be held more accountable for its vehicles, regardless of who, or what, is driving.

Is it OK to beta test self-driving on open roads?

This raises the question: Should we be OK with self-driving cars on open roads? Thankfully, the beta is open only to those enrolled in the company’s early access program. A majority of those 2,000 or so people are Tesla employees, so for now that’s mostly people who know what they’re doing. However, it’s understandable that some members of the public aren’t happy about unproven beta software being tested around one of their most valuable assets.